Funded by a Grand Challenges Impact Award from the University of Maryland, Values-Centered Artificial Intelligence (VCAI) focuses on developing artificial intelligence (AI) tools, theories and practices that align with community needs, ethics and human values. The team is developing applications in fields such as education, health care, transportation, communication and accessibility.

VCAI is one of 11 projects involving College of Education faculty that were funded by Grand Challenges Grants earlier this year. In all, the university awarded more than $30 million to 50 projects addressing pressing societal issues, including educational disparities, social injustice, climate change, global health and threats to democracy. The Grand Challenges Grants Program is the largest and most comprehensive program of its type in the university’s history.

Led by Hal Daumé III of the College of Computer, Mathematical, and Natural Sciences (CMNS), VCAI includes colleagues from the College of Education, College of Arts and Humanities, Robert H. Smith School of Business, College of Behavioral and Social Sciences, College of Information Studies and CMNS.

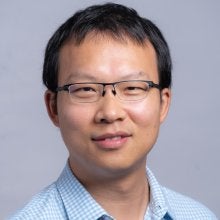

Jing Liu, assistant professor in education policy, is a member of the VCAI team. Liu explains how the initiative will put people, along with their needs and values, at the center of AI development and application. He also shares his thoughts on AI’s potential to transform education for the better.

What are some of the major concerns people have about ethics and AI?

One of the biggest concerns about AI is that it can deviate from human values and do things that are detrimental to society, such as eliminating jobs or making biased or discriminatory decisions.

How would a values-centered AI approach help to address these concerns?

VCAI brings community stakeholders to the center of AI development and application so that it addresses their needs and fundamental human values such as justice, dignity, self-efficacy, creativity and social connection. These stakeholders will also hold AI, and the ways it is used, accountable.

What potential does AI have to improve education?

There are lots of potential applications for AI in education, such as providing personalized learning and better interventions to students and giving teachers detailed feedback.

The idea of values-centered AI fits education very well. Education is deeply human-centered; it’s built on student-teacher interaction, a fundamental process that helps students learn, grow and become responsible adults and members of society. AI shouldn’t replace human beings; it should support teachers and students. People in education should have a strong voice in this process, and we need to center students and teachers. If we do that, there’s an opportunity to improve the effectiveness and equity of the entire education system.

Why is it important to bring colleagues with different types of expertise together to work on this initiative?

It’s exciting that this initiative is bringing together so many people from different disciplines who were previously scattered across campus. It gives us the opportunity to work with colleagues we otherwise would have been unlikely to talk to and to think about AI’s potential applications in new ways. Bringing people from different backgrounds and disciplines together to tackle this grand challenge is, in itself, really meaningful. If we can do this not only at UMD but around the world, it will drive reform in the AI industry.

Why does this issue matter to you personally?

I was trained at Stanford University, in Silicon Valley, so I have been thinking about the role of technology since the very beginning of my academic career. I fundamentally believe that human interaction is at the center of the learning process. I’m interested in how we can leverage AI, and technology in general, to support teachers and students.